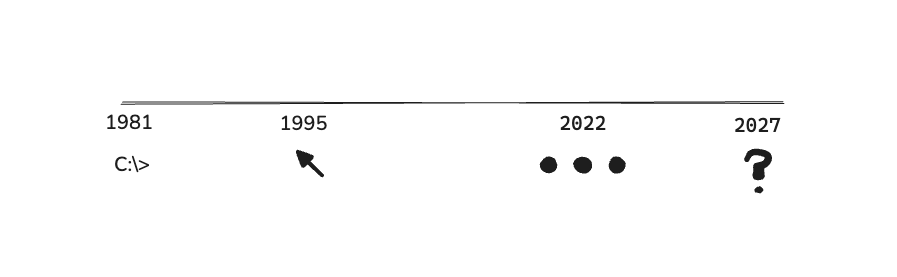

In the future, people will say: Imagine celebrating ChatGPT in 2025. Sure, it's impressive (paradigm-changing even), but it's also a bit like applauding MS-DOS in 1981: the system is powerful beneath the surface, but the whole thing hinges on a blinking cursor and the cryptic commands of the prompt engineer. We haven't yet encountered our Windows 95 moment, the kind of intuitive, user-friendly leap that moves technology from niche fascination to essential infrastructure.

Why Interfaces Lag Behind Compute

Historically, computational breakthroughs race far ahead of interfaces. In the '70s, mainframes easily had enough horsepower for graphical interaction, but it took the Macintosh GUI in 1984 to make computing human-friendly. Multitouch surfaces were prototyped in research labs as early as the 1980s, and NYU researchers were demonstrating them by the mid-2000s, yet it wasn't until Apple's iPhone in 2007 that the average consumer finally experienced this revolutionary interface. The pattern is predictable: technology unlocks possibilities first; then interface designers figure out how to actually put it into human hands.

So today, we're in that same gap. Transformers changed the world in 2017, and ChatGPT debuted in 2022, yet our current AI interface remains stubbornly a command line in a chat window: a sophisticated digital assistant controlled by typing short messages back and forth, like a Unix terminal from the 1970s, only a lot prettier.

The interface revolution usually trails the tech by roughly a decade, so if we're timing it right, the mainstream AI interface will emerge around 2027–29. It hasn't arrived yet.

Licklider's Forgotten Dream

Back in 1960, J.C.R. Licklider, one of computing’s earliest and most insightful visionaries, predicted something he termed “man-computer symbiosis.” He wasn't envisioning simple question-and-answer mechanics. Instead, he dreamed of computers acting like intuitive partners: predictive, adaptive, and contextually aware, working seamlessly alongside us. By Licklider’s standards, the current state of AI is less like a trusted colleague and more like a pager from the '90s: you summon it, it pings back, and you negotiate every step explicitly.

Right now, our AI interactions remain manual. We enter commands one by one; we are effectively batch processing our thoughts at typing speed. Real symbiosis, by Licklider’s design, would involve continuous, real-time cooperation. We haven't gotten there. We're still pinging, waiting, and instructing, rather than working collaboratively.

The Prompt Bottleneck

The bottleneck isn't the AI; it's us. Humans type at around 40 words per minute, which at the conventional five characters per word is roughly 3 bytes per second. Meanwhile, GPT-4o casually consumes 20 megabytes per second. That means more than 99.99% of our potential bandwidth with these intelligent systems is locked behind our comparatively sluggish fingers.
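The arithmetic behind that comparison can be sketched in a few lines of Python. This is a rough check, assuming the conventional five characters per word and one byte per character; the 20 MB/s model figure is taken from the text as given rather than measured, and the function names are illustrative.

```python
# Back-of-the-envelope check of the typing bottleneck.
# Assumptions (not from the article): 5 characters per word, 1 byte per character.

WORDS_PER_MINUTE = 40            # typing speed cited in the text
CHARS_PER_WORD = 5               # assumed: the usual convention for measuring WPM
MODEL_BYTES_PER_SECOND = 20e6    # the article's 20 MB/s figure, taken as given


def typing_bytes_per_second(wpm: float, chars_per_word: float = CHARS_PER_WORD) -> float:
    """Convert words per minute into bytes per second of typed text."""
    return wpm * chars_per_word / 60.0


def unused_fraction(human_bps: float, model_bps: float) -> float:
    """Fraction of the model's input capacity that typing leaves idle."""
    return 1.0 - human_bps / model_bps


human_bps = typing_bytes_per_second(WORDS_PER_MINUTE)
print(f"typing: {human_bps:.1f} bytes/s")
print(f"unused: {unused_fraction(human_bps, MODEL_BYTES_PER_SECOND):.5%}")
```

Whatever the exact model-side number turns out to be, the gap spans several orders of magnitude, which is the point of the comparison.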

The solution isn't a faster typist; it’s an entirely new interaction model. Think of Gmail’s Smart Compose feature, where the interface watches you type and suggests how to finish the sentence. Extend that logic everywhere: your software constantly observes your cursor movements, clicks, context switches, gaze, and calendar events. It proactively anticipates your needs. You don’t prompt the AI; the AI prompts you: gently, subtly, intelligently.

What the GUI Moment Will Look Like

We once transitioned from a cryptic command prompt (C:\> in DOS) to the intuitive visual elements of Windows 95: icons, menus, and windows that opened computing to millions.

Similarly, our AI interface will shift from typing into a prompt (>>>) and reading text-based responses to proactive agents that automatically interpret multimodal signals (mouse movements, clicks, gestures, speech), then suggest or execute actions seamlessly in context.

This won’t just be cosmetic. It will feel as fundamentally transformative as the jump from DOS to graphical desktops—maybe even more so.

The "AI Membrane" Wars of 2026

As we near this leap, tech giants will compete fiercely—not just over raw compute or AI models, but over how AI deeply integrates into everyday life. Apple’s Vision Pro 2 could track your gaze and gestures continuously, offering contextually relevant insights. Meta’s next-generation Ray-Bans might whisper suggestions directly into your ear, anticipating your needs based on visual and auditory context. Microsoft’s Copilot could watch and remember everything you see, surfacing relevant information proactively and intelligently.

These aren’t just products—they’re membranes, porous layers between humans and AI systems, capturing contextual signals and turning passive observation into active engagement. Whoever controls this "AI membrane" captures enormous value, redefining digital power dynamics for decades.

Regulation: Urban Planning for Digital Minds

As these interfaces evolve, regulation must become sophisticated—more akin to urban planning than traditional tech oversight. If done poorly, we risk repeating the spam email scenario, but with potentially devastating consequences like privacy violations or manipulation at unprecedented scales.

What might thoughtful regulation look like?

- Data sovereignty laws that define clearly which personal signals (eye-tracking, emotional cues, biometric data) can leave your device.

- Contextual boundaries that keep separate life contexts (professional, medical, personal) from inadvertently bleeding into one another.

- LLM building codes, mandating transparency, secure logging, revocation protocols, and rigorous auditability.
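To make the first two ideas concrete, here is a minimal sketch of how a device might encode them as a default-deny policy. Everything in it is hypothetical: the `SignalPolicy` class, the signal names, and the context labels are invented for illustration and do not come from any existing law or product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalPolicy:
    """Hypothetical on-device policy: default deny, explicit allow."""
    exportable: frozenset          # signal names allowed to leave the device
    allowed_flows: frozenset       # (source context, destination context) pairs

    def may_export(self, signal: str) -> bool:
        """Data sovereignty: only listed signals may leave the device."""
        return signal in self.exportable

    def may_flow(self, source: str, destination: str) -> bool:
        """Contextual boundary: data stays in its context unless a flow is listed."""
        return source == destination or (source, destination) in self.allowed_flows


# Illustrative values only.
policy = SignalPolicy(
    exportable=frozenset({"clicks", "calendar_events"}),
    allowed_flows=frozenset({("personal", "professional")}),
)

print(policy.may_export("eye_tracking"))            # not on the allow list
print(policy.may_flow("medical", "professional"))   # no such flow is listed
```

The design choice worth noting is the default: anything not explicitly permitted is refused, which mirrors how a building code treats unpermitted construction.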

Regulation can’t merely guard gates. It will design how we live safely within these new AI-infused environments.

Preparing for the Post-Prompt Reality

So how should businesses and developers prepare?

- Proactive Copilots: Develop interfaces that respond instantly (sub-150ms latency) to inferred user intent, rather than waiting for explicit commands.

- Context Instrumentation: Track non-verbal interactions (mouse movements, gaze, interactions with UI elements) to predictively understand intent.

- User Trust Loops: Allow users easy veto and correction mechanisms, feeding that feedback directly into nightly retraining and refinement loops.

- Structured Contracts: Treat tasks as well-defined JSON-style objects containing goals, constraints, and success metrics, rather than arbitrary text strings.
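As a rough illustration of the last two items, a task can be modeled as a small structured object with a built-in veto path. This is a sketch under stated assumptions: `TaskContract`, its field names, and the example values are all hypothetical, not an established schema.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class TaskContract:
    """Hypothetical structured task: a goal, constraints, and success metrics."""
    goal: str
    constraints: list = field(default_factory=list)
    success_metrics: dict = field(default_factory=dict)
    vetoed: bool = False

    def veto(self, reason: str) -> dict:
        """Record a user veto and return a feedback record for later refinement."""
        self.vetoed = True
        return {"goal": self.goal, "feedback": "veto", "reason": reason}

    def to_json(self) -> str:
        """Serialize the contract so it can be passed around instead of free text."""
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only.
task = TaskContract(
    goal="Reschedule Thursday's design review",
    constraints=["no meetings before 10:00", "keep all attendees"],
    success_metrics={"max_latency_ms": 150},
)
payload = json.loads(task.to_json())
feedback = task.veto("wrong meeting")
print(payload["goal"], feedback["reason"])
```

Because the goal, constraints, and metrics are explicit fields rather than prose, both the agent and the user can inspect exactly what was agreed, and a veto produces a record that a refinement loop could consume.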

This isn’t just feature development; it’s shifting from reactive UI to predictive UX, paving the way for genuine human-AI collaboration.

What Comes Next

In my mind the timeline looks something like this:

- 2025–2026: Prompt-based interfaces dominate, and the era of manual Copilots peaks.

- 2027: First mainstream proactive, multimodal interfaces debut.

- 2029: Explicit prompting becomes obsolete for most practical use cases; typing commands feels as quaint as opening a DOS prompt does today.

When explicit prompting is forgotten, replaced by fluid interaction, Licklider’s dream of man-computer symbiosis will finally arrive.