Christmas is in the air. The lights twinkle to ward off the darkness of winter, and we sip mulled wine and laugh condensed laughs outside the packed pubs. It is a deeply nostalgic time, full of traditions. Many people get wistful and start making resolutions and plans for a better next year. In this short essay I propose one new resolution for this festive period and for 2025: write evals for everything.

"Hark!" the Herald Angel sings, "Not another tech evangelist!" But yes, this needs to be said. This year has seen so much tech development; some would say not fast enough, others would say anxiety-inducingly fast. Some people are living so far in the future that they spend most of their time talking about artificial general intelligence, while others say that the models aren't even intelligent and that it is all a bubble. And all the average person knows is that the media is finally fully on the AI bandwagon, for better or worse, and that it can all be rather terrifying, between the projected job losses and the sense that you don't really know what it means for something to know everything that is on the internet...

Understanding Evals: Your Personal Benchmark

In the world of artificial intelligence, researchers use evaluations (evals) to measure what AI models can and cannot do. These aren't just academic exercises—they're practical tools for understanding capabilities and limitations. When a new AI model is released, researchers run it through these tests to see if it actually advances the field or just makes impressive-sounding claims.

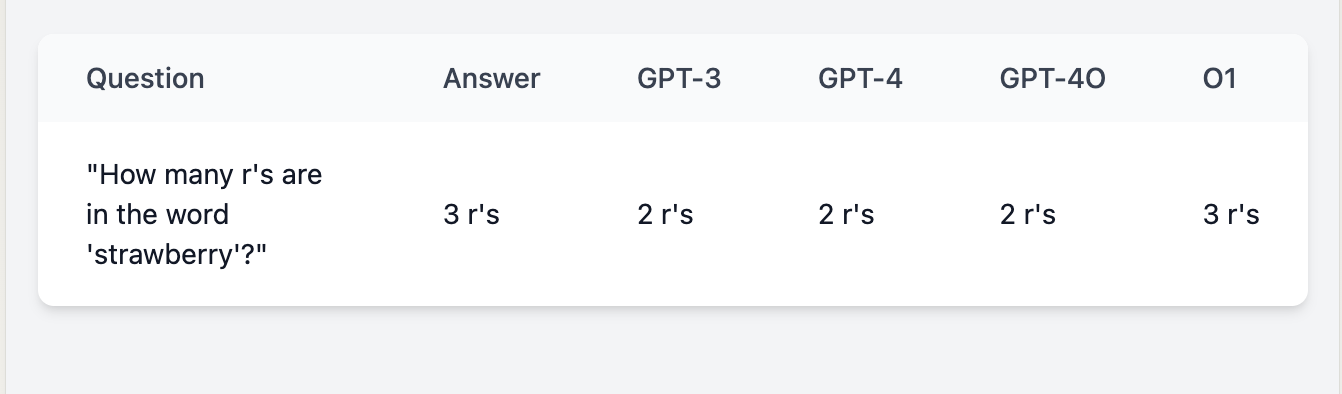

Let’s take a simple example from the world of AI. Imagine we’re testing how well different models can answer the question, “How many ‘r’s are in the word ‘strawberry’?” This might seem trivial, but it highlights key differences in performance. Here’s how a toy eval could play out:
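One minimal way to sketch it in Python is shown here. The two "models" are made-up stand-in functions, not real AI systems or APIs; in practice you would replace them with calls to whichever tools you actually use. The pass rule (the expected number must appear in the answer) is also just an assumption chosen for simplicity.

```python
from typing import Callable

# Hypothetical stand-ins for real models: each takes a prompt and returns text.
def careless_model(prompt: str) -> str:
    return "There are 2 r's in strawberry."

def careful_model(prompt: str) -> str:
    return "There are 3 r's in strawberry."

PROMPT = "How many 'r's are in the word 'strawberry'?"

# Compute the ground truth rather than typing it in by hand.
EXPECTED = str("strawberry".count("r"))

def run_eval(model: Callable[[str], str]) -> bool:
    """Pass if the expected number appears anywhere in the model's answer."""
    return EXPECTED in model(PROMPT)

models = {"careless_model": careless_model, "careful_model": careful_model}
results = {name: run_eval(fn) for name, fn in models.items()}

for name, passed in results.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

The whole eval is one question, one known answer, and one pass/fail rule. Everything else in this essay is a variation on that shape.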

An aside: it is crazy to think this has only been solved in the last few months. Imagine what other things the models may or may not be able to do! This is not to say the models are silly or stupid; the point is that you wouldn’t have come across this gap unless you had thought to test for it. Which of your personal use cases can’t yet be handled by AI, and which of the things you do constantly are easily replicable by AI?

You can apply this same thinking to your own work and life. Instead of getting caught up in every new AI announcement or feeling like you're constantly falling behind, develop your own set of tests—your personal evals. These become your constant reference points in a rapidly changing landscape.

Creating Your Personal Eval Framework

Start by mapping out three key areas:

- What you know works well now

- What you'd like to automate or improve

- What seems just out of reach

For example, if you're a writer, your eval might look like this:

- Current capability: "Can AI help me brainstorm article topics?"

- Automation goal: "Can it create a first draft that captures my voice?"

- Stretch goal: "Can it engage in meaningful editorial dialogue about my work?"

The beauty of having your own eval framework is that it grounds you. When a new AI model comes out, instead of wondering if you're missing out, you can run it through your personal tests. If it still can't pass the evals that matter to you, then perhaps that "breakthrough" isn't so revolutionary for your needs.
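As a rough sketch, the writer's framework could be written down as data, so that comparing a new tool against your current one becomes a lookup rather than a feeling. The class name, tier labels, and verdicts are all hypothetical; the verdicts are hand-recorded judgments invented for illustration, not measured results.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PersonalEval:
    tier: str      # "current", "automation", or "stretch" (labels are assumptions)
    question: str

WRITER_EVALS = [
    PersonalEval("current", "Can AI help me brainstorm article topics?"),
    PersonalEval("automation", "Can it create a first draft that captures my voice?"),
    PersonalEval("stretch", "Can it engage in meaningful editorial dialogue about my work?"),
]

def newly_passed(old: dict[str, bool], new: dict[str, bool]) -> list[str]:
    """Return the tiers the new tool passes that the old tool did not."""
    return [
        e.tier
        for e in WRITER_EVALS
        if new.get(e.tier, False) and not old.get(e.tier, False)
    ]

# Made-up verdicts, filled in by hand after trying each tool yourself.
current_tool = {"current": True, "automation": False, "stretch": False}
new_tool = {"current": True, "automation": True, "stretch": False}

print(newly_passed(current_tool, new_tool))  # ['automation']
```

If the list comes back empty, the new release changed nothing that matters to you, however loud the announcement.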

The Art of Better Questions

Creating effective personal evals requires asking the right questions:

- Start With Clarity

  - What specific task am I trying to improve?

  - What does success look like in measurable terms?

  - How will I know if an AI tool is actually helping?

- Build in Measurement

  - Track concrete outcomes, not just impressions

  - Document both successes and failures

  - Review and adjust your metrics regularly

- Stay Focused

  - Choose a small set of crucial capabilities to track

  - Don't try to evaluate everything at once

  - Keep your tests relevant to your actual needs

- Look for Patterns

  - What consistently works or doesn't work?

  - Where do you find yourself making the same evaluations?

  - What surprises you about the results?

Making It Work: Practical Steps

- Create Your Baseline

  - List the tasks you do regularly

  - Note which ones you think AI might help with

  - Document your current process and pain points

- Design Simple Tests

  - For each task, create a clear "can it or can't it" test

  - Include edge cases you commonly encounter

  - Make sure the test reflects your real-world needs

- Keep Track

  - Document your test results

  - Note which AI tools you've tried

  - Record both successes and failures
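The "Keep Track" step can be as light as a CSV file. The sketch below is one possible layout, not a prescribed one: the column names, the file name, and the tool and task names are all assumptions made for illustration, and the logged verdicts are invented.

```python
import csv
import tempfile
from collections import defaultdict
from datetime import date
from pathlib import Path

FIELDS = ["date", "task", "tool", "passed", "notes"]  # assumed column layout

def record_result(log: Path, task: str, tool: str, passed: bool, notes: str = "") -> None:
    """Append one test result, writing the header row if the file is new."""
    is_new = not log.exists()
    with log.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "date": date.today().isoformat(),
            "task": task,
            "tool": tool,
            "passed": int(passed),  # stored as 1 or 0
            "notes": notes,
        })

def pass_counts(log: Path) -> dict[str, tuple[int, int]]:
    """Return {tool: (tests passed, tests attempted)}."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    with log.open(newline="") as f:
        for row in csv.DictReader(f):
            counts[row["tool"]][0] += int(row["passed"])
            counts[row["tool"]][1] += 1
    return {tool: (p, n) for tool, (p, n) in counts.items()}

# Demo in a temporary directory; point this at a permanent file for real use.
log_path = Path(tempfile.mkdtemp()) / "eval_log.csv"
record_result(log_path, "brainstorm topics", "tool_a", True)
record_result(log_path, "first draft in my voice", "tool_a", False, "too formal")
record_result(log_path, "first draft in my voice", "tool_b", True)

summary = pass_counts(log_path)
print(summary)
```

Failures are logged alongside successes, with a note on what went wrong, so that patterns show up when you review the file later.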

A Different Kind of Holiday Reflection

As we wrap up another year, instead of making vague resolutions, try building your personal eval framework. What matters to you? What do you want to improve? What would make a meaningful difference in your work and life?

Remember, the point isn't to chase every new development. It's to know what matters to you and have a way to test if new tools actually help you achieve those goals. When you have your own standards, you can step off the endless treadmill of trying to keep up with every new announcement.

Your evals become your compass, helping you navigate the flood of new tools and technologies. They help you distinguish between what's truly revolutionary for your needs and what's just impressive on paper.

As we head into 2025, give yourself this gift: the clarity to know what matters to you, the tools to test what works, and the confidence to stay focused on your own path forward.

Happy evaluating, and happy holidays.