Welcome to Mines and Rabbit Holes #2. I'm glad to say that there is indeed a follow-up, and I hope you enjoy it!

Speaking of mines...

Anti-AI or Canaries in the Coal Mine

Lately, in the news and in the blogosphere, there has been a lot more anti-AI sentiment: the rise of the term "clanker", a mix of some true tragedies, underwhelming model releases like GPT-5, absurdly good model releases like Nano Banana that feel like they are threatening the compositing profession, and new reports on the efficacy of AI projects.

MIT recently released a paper saying that 95% of AI pilots are failing, whilst Stanford released a paper stating that, since 2022, workers aged 22–25 in high-AI-exposure jobs (like accounting, software development, and customer service) have seen a notable drop in hiring (-13%).

I think there are issues with both papers, particularly the Stanford release, which at the very least confuses correlation with causation:

- Hiring slowdowns in late 2022–2023 line up more with post-COVID tech layoffs and macroeconomic contraction than with AI adoption.

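- SWE-bench was only created in 2023, so early scores were understandably low; since then, a lot of training-data leakage and Goodhart's law (once a measure becomes a target, it stops being a good measure) have muddied what the scores tell us.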

I wonder:

- why it isn't measuring outsourced work more (which arguably tends to be more amenable to automation), and

- how AI could be the cause at all. Let's be very fair: no one was replacing people with AI in 2022/2023, and the large firms that would be hiring weren't really moving past proof of concept (POC) until 2024. And let's not forget that the MIT paper states that 95% of pilots are failing...

But we can consider this paper a canary in the coal mine: it is the first paper on the topic to be widely believed, and it does sound like we are moving in this direction.

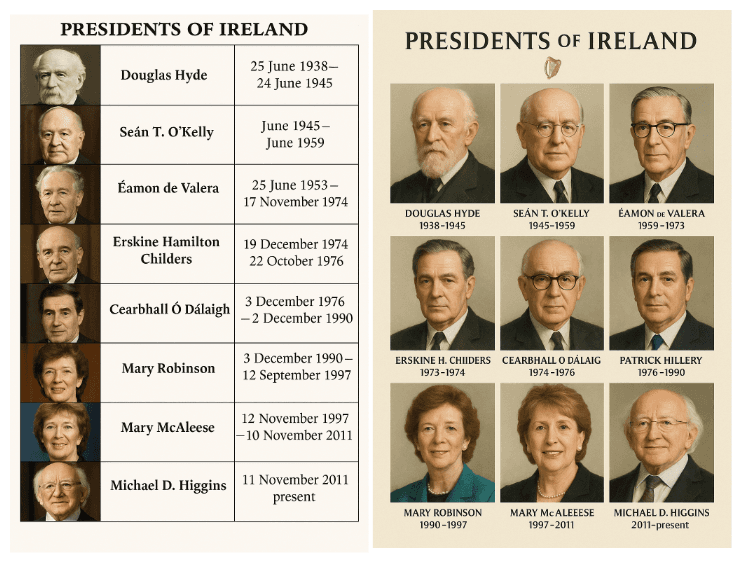

I'll leave it to the discretion of my eagle-eyed readers to figure out what's wrong with both of these AI images (created using GPT-5). This is surprisingly better than the generation of the American presidents that went viral on X.

The Product Era of 💅

Despite my recent article "AI and Mental Masturbation" and the model's patchy ability to produce all the Irish Presidents (based on Gary Marcus' post), I am still quite tech-positive and very optimistic about what we can use this technology for. Even if the models slow down, we don't achieve true thinking in the way that Kenneth Stanley talks about, and we're left with this fractal AGI, there are still so many things we can build, do, and ameliorate.

I am a longtime subscriber to Daniel Miessler's blog, a truly high-alpha technical blog. I've been very excited to work through his recent articles describing his personal AI assistant, and I've been in the process of building my own. Ultimately, for many tasks, we do have functional AGI right now, and if we make the best of it, we can turn into AI solution factories.

If you are looking for some predictions about the future, Andrew Ng has been rather correct in the past, and perhaps he will be correct again. One thing we do know is that the fraction of work humans can actually complete is very small relative to all the work that could possibly be done. AI will indeed amplify the amount of work we can do; it all comes down to how we use it.

And there is now a framework to start understanding how these products and techniques can be measured:

The KISAC Framework: Measuring Intelligence Task Performance

To understand why AI can expand both dimensions so dramatically, consider what makes someone good at Intelligence Tasks:

- 📘 Knowledge — All the information, training, and experience

- 🧠 Intelligence — Ability to find patterns and generate insights

- 🕰️ Speed — How many tasks completed per time period

- 🔎 Accuracy — Correctness and error rates

- 💶 Cost — Total expense to employ and maintain

From "The Area Under the Curve: How AI Expands Human Work Capacity"
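To make the five dimensions a bit more concrete, here is a minimal sketch of how one might record a KISAC profile and compare two workers. Everything in it is my own assumption rather than part of the framework as quoted: the `KisacProfile` name, the 0 to 1 scales for knowledge and intelligence, the made-up numbers, and especially the two derived metrics, which simply combine Speed, Accuracy, and Cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KisacProfile:
    """One worker (human or AI) described along the five KISAC dimensions.

    Scales and units are illustrative assumptions, not part of the framework.
    """
    name: str
    knowledge: float     # 0..1, hypothetical coverage score
    intelligence: float  # 0..1, hypothetical insight score
    speed: float         # tasks completed per hour
    accuracy: float      # fraction of tasks completed correctly, 0..1
    cost: float          # total cost per hour

    def correct_tasks_per_hour(self) -> float:
        # Speed discounted by accuracy: only correct work counts.
        return self.speed * self.accuracy

    def cost_per_correct_task(self) -> float:
        # Hourly cost spread over the correct tasks produced in that hour.
        correct = self.correct_tasks_per_hour()
        if correct == 0:
            return float("inf")
        return self.cost / correct


# Made-up numbers, purely for illustration.
analyst = KisacProfile("analyst", 0.8, 0.9, speed=2.0, accuracy=0.95, cost=60.0)
assistant = KisacProfile("assistant", 0.9, 0.6, speed=40.0, accuracy=0.80, cost=4.0)

for worker in (analyst, assistant):
    print(
        f"{worker.name}: {worker.correct_tasks_per_hour():.1f} correct tasks/hour, "
        f"{worker.cost_per_correct_task():.2f} per correct task"
    )
```

The point of the sketch is only that Speed, Accuracy, and Cost combine into numbers you can track over time; Knowledge and Intelligence are much harder to score, which is why they are left as plain fields here.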

The Shape of The System and Antifragile Processes

Antifragile orgs don’t treat documentation as a chore. They capture expertise as a byproduct of real work:

- Automatic ingestion: AI parses Slack, tickets, PRs — no extra keystrokes required.

- A living knowledge graph: Concepts connect across systems. Gaps surface automatically.

- Feedback loops: Every solved ticket updates the system. Answers get smarter over time.

One of my favourite concepts that I've ever come across is antifragility, as coined by Nassim Taleb in Antifragile: something which benefits from disorder. As I previously wrote in "Train the model; train your team", the imperative is now to track and elucidate processes in order to offload as much as possible to AI. In doing so, we are also building more robust and potentially antifragile teams.

To really get the best out of these systems, we have to realise that we're mostly dealing with unstructured data, and we still have to get it into structured formats in order to make use of it in our more deterministic systems. Anson Yu writes a very interesting article about how to structure your system to allow for AI agents, using education's favourite structure, Bloom's Taxonomy, to do so. I think there's still a lot of work to be done on taking unstructured output and generating structured output. Pydantic, Zod, and other typing layers go a long way, but being able to ask for specific outputs without degrading the quality of the model by putting those handcuffs on it is still something that hasn't been fully addressed.
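As a rough illustration of what such a typing layer does, here is a minimal sketch that takes raw model text and either turns it into a typed object or rejects it. To keep it dependency-free I've used only the standard library (`json` and `dataclasses`) as a stand-in for Pydantic or Zod; the `TicketSummary` shape, the `OutputError` class, and the `parse_ticket_summary` helper are all hypothetical names invented for this example.

```python
import json
from dataclasses import dataclass


class OutputError(ValueError):
    """Raised when model output does not match the expected shape."""


@dataclass(frozen=True)
class TicketSummary:
    title: str
    severity: int      # 1 (low) to 5 (critical)
    tags: tuple = ()   # free-form labels


def parse_ticket_summary(raw: str) -> TicketSummary:
    """Validate raw model text and return a typed TicketSummary."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise OutputError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise OutputError("expected a JSON object")

    title = data.get("title")
    severity = data.get("severity")
    tags = data.get("tags", [])

    if not isinstance(title, str) or not title.strip():
        raise OutputError("title must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(severity, bool) or not isinstance(severity, int):
        raise OutputError("severity must be an integer")
    if not 1 <= severity <= 5:
        raise OutputError("severity must be between 1 and 5")
    if not isinstance(tags, list) or not all(isinstance(t, str) for t in tags):
        raise OutputError("tags must be a list of strings")

    return TicketSummary(title.strip(), severity, tuple(tags))


# Pretend this string came back from a model.
raw_output = '{"title": "Login fails on mobile", "severity": 4, "tags": ["auth"]}'
summary = parse_ticket_summary(raw_output)
```

In a real system the rejection path is where the interesting decisions live: retry with the error message, fall back to a human, or loosen the schema. None of that removes the handcuffs problem; it only makes the failures visible.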

Cúinne na Gaeilge (The Irish-Language Corner)

I'm always looking for some new bloggers to read in Irish. Recently, I translated Paul Graham's new essay "The Shape of the Essay Field" into Irish, and also wrote a piece on how to translate (or not translate) "clanker".

It can't be stated enough that one of the most important things you can do in life is to think deeply, and the goal of Mines and Rabbit Holes is to help me consolidate and review what I've been thinking deeply about over the last couple of weeks. In many ways, this (irregular) periodical puts together "thinking more quickly" and "curiosity and the compression imperative". On any of the points above, I welcome any feedback, critiques, or further links. Because it's not just the models that need to grow smarter; we do too :]